Simple Linear Regression using R

Wikipedia says linear regression is an approach for modeling the relationship between a dependent variable y and one or more explanatory variables (or independent variables) denoted X.

Regression uses the historical relationship between the dependent and independent variables to predict future values of the dependent variable. Business applications of the regression model include predicting future sales, labor demand, and inventory needs.

When only one independent variable is used in regression, it is called simple regression.

In this post, we'll see how to use this technique, with each step explained using R.

Auto Insurance in Sweden

Our primary objective is to apply the linear regression technique and understand the relationship between the dependent and explanatory variables, after which we'll try to answer the following questions.

- Determine a linear regression model equation to represent this data

- Decide whether the new equation is a "good fit" to represent this data

- Graphically represent the relation

- Make a few predictions

Let's get into some action.

Please note: The data can be downloaded from here.

In the dataset, we have 63 observations in two columns. The first column is "number of claims", which is our independent variable, and the second column is "total payment for all the claims in thousands of Swedish Kronor", which is our dependent variable.

Hence, x = number of claims and y = total payment for all the claims.

The next step is to import the data into R.
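The import step might look roughly like the sketch below. It assumes the download has been saved as a CSV with a header row; the file layout is an assumption, and the five rows here are invented stand-ins so the snippet runs on its own (they are not values from the real dataset). Only the column names NoofClaims and TotalPayments come from the model call used later in this post.

```r
# Hypothetical stand-in for the downloaded file: a few invented rows,
# NOT values from the real Swedish auto insurance data.
csv_text <- "NoofClaims,TotalPayments
10,50
20,90
30,120
40,160
50,190"

# With a real file on disk you would pass its path instead of `text = ...`,
# e.g. read.csv("<wherever you saved the download>").
toy <- read.csv(text = csv_text)

dim(toy)    # rows and columns that were read
names(toy)  # column names the model formula will refer to
```

With the real file, the result would be assigned to SwedenAutoIns, the data frame name used in the lm() call further down.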

Taking a closer look at the data.
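A closer look usually means checking the structure, the first few rows, and some summary statistics. Here is a small sketch using base R; the data frame is an invented toy example (not the real 63 observations) that only borrows the column names from this post.

```r
# Invented toy data with the same column names as the post's dataset.
toy <- data.frame(
  NoofClaims    = c(10, 20, 30, 40, 50),
  TotalPayments = c(50, 90, 120, 160, 190)
)

str(toy)      # column types and a preview of the values
head(toy, 3)  # first three rows
summary(toy)  # min, quartiles, mean and max for each column

# Quick check for missing values in each column.
missing_per_column <- colSums(is.na(toy))
missing_per_column
```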

It always helps to visualize data which can be achieved using some of the R functions.
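One way to do this is a simple scatter plot with base R's plot(). The sketch below uses invented toy values (not the real dataset) with the post's column names; the axis labels and title are just suggestions.

```r
# Invented toy data with the same column names as the post's dataset.
toy <- data.frame(
  NoofClaims    = c(10, 20, 30, 40, 50),
  TotalPayments = c(50, 90, 120, 160, 190)
)

# Scatter plot: independent variable on x, dependent variable on y.
plot(toy$NoofClaims, toy$TotalPayments,
     xlab = "Number of claims",
     ylab = "Total payments (thousand SEK)",
     main = "Total payments vs. number of claims",
     pch  = 19)

# The correlation coefficient gives a numeric hint of how linear the cloud is.
r <- cor(toy$NoofClaims, toy$TotalPayments)
r
```

If the points fall roughly along a line, a linear model is a reasonable thing to try.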

Now, let us try to build the model using R (which makes the job of finding the intercept and the slope much easier).

lmfit <- lm(TotalPayments ~ NoofClaims, data = SwedenAutoIns)

By applying the lm() function to the two variables, we build a linear model of TotalPayments as a function of NoofClaims.

Imagine fitting a straight line through these data points such that the sum of the squares of the errors is as small as possible.
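To make the "minimum sum of squared errors" idea concrete, here is a sketch that computes the slope and intercept by hand with the standard least-squares formulas and compares them with lm(). The data are invented toy values, not the real dataset, and the variable names are only for illustration.

```r
# Invented toy data with the same column names as the post's dataset.
toy <- data.frame(
  NoofClaims    = c(10, 20, 30, 40, 50),
  TotalPayments = c(50, 90, 120, 160, 190)
)
x <- toy$NoofClaims
y <- toy$TotalPayments

# Closed-form least-squares estimates for a single predictor:
#   slope     = sum((x - mean(x)) * (y - mean(y))) / sum((x - mean(x))^2)
#   intercept = mean(y) - slope * mean(x)
slope     <- sum((x - mean(x)) * (y - mean(y))) / sum((x - mean(x))^2)
intercept <- mean(y) - slope * mean(x)

# lm() should arrive at the same two numbers.
fit <- lm(TotalPayments ~ NoofClaims, data = toy)
rbind(by_hand = c(intercept, slope), from_lm = unname(coef(fit)))

# The quantity being minimized: the sum of squared errors of the fitted line.
sse <- sum((y - (intercept + slope * x))^2)
sse
```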

The model summary is a very important tool for understanding the relationship between the variables and for deciding whether this model is a "good fit".
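In R the summary comes from calling summary() on the fitted model. A sketch on invented toy data follows (not the real dataset, so the numbers will differ from the ones quoted in this post).

```r
# Invented toy data with the same column names as the post's dataset.
toy <- data.frame(
  NoofClaims    = c(10, 20, 30, 40, 50),
  TotalPayments = c(50, 90, 120, 160, 190)
)
fit <- lm(TotalPayments ~ NoofClaims, data = toy)

# Full printed report: residuals, coefficient table, R-squared, F-statistic.
fit_summary <- summary(fit)
print(fit_summary)

# Individual pieces can also be pulled out of the summary object.
fit_summary$coefficients  # estimates, standard errors, t values, p-values
fit_summary$r.squared     # proportion of variation explained by the model
```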

R2 (R-squared) is a statistic that will give some information about the goodness of fit of a model. An R2 of 1 indicates that the regression line perfectly fits the data.

The R2 value of 0.8333 here means that ~83% of the total variation in y (Total Payments) can be explained by the linear relationship between x and y.
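To see where this number comes from, R2 can be computed by hand as 1 minus the ratio of the residual sum of squares to the total sum of squares. The sketch below does that on invented toy data (not the real dataset, so it will not give 0.8333) and checks the result against what summary() reports.

```r
# Invented toy data with the same column names as the post's dataset.
toy <- data.frame(
  NoofClaims    = c(10, 20, 30, 40, 50),
  TotalPayments = c(50, 90, 120, 160, 190)
)
fit <- lm(TotalPayments ~ NoofClaims, data = toy)

# Variation left unexplained by the line (residual sum of squares).
ss_res <- sum(residuals(fit)^2)

# Total variation of y around its mean (total sum of squares).
ss_tot <- sum((toy$TotalPayments - mean(toy$TotalPayments))^2)

r2_manual <- 1 - ss_res / ss_tot
r2_lm     <- summary(fit)$r.squared

c(manual = r2_manual, from_summary = r2_lm)
```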

The linear equation the model has given us is

y = 19.9945 + 3.4138 * x
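The intercept and slope in an equation like this can be read straight off the fitted model with coef(). A sketch on invented toy data follows (not the real dataset, so the coefficients differ from the ones above).

```r
# Invented toy data with the same column names as the post's dataset.
toy <- data.frame(
  NoofClaims    = c(10, 20, 30, 40, 50),
  TotalPayments = c(50, 90, 120, 160, 190)
)
fit <- lm(TotalPayments ~ NoofClaims, data = toy)

# coef() returns a named vector: "(Intercept)" first, then the slope.
b <- coef(fit)
b

# Format the fitted line in the same style as the equation in the post.
equation <- sprintf("y = %.4f + %.4f * x", b[["(Intercept)"]], b[["NoofClaims"]])
equation
```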

Now we can go ahead to make our predictions for y by applying this model for different values of x.

Before predicting y, let's take a moment to visualize the model along with the data points.
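One common way to draw this in base R is to plot the points and then overlay the fitted line with abline(). The sketch below uses invented toy data (not the real dataset); the colors and labels are arbitrary choices.

```r
# Invented toy data with the same column names as the post's dataset.
toy <- data.frame(
  NoofClaims    = c(10, 20, 30, 40, 50),
  TotalPayments = c(50, 90, 120, 160, 190)
)
fit <- lm(TotalPayments ~ NoofClaims, data = toy)

# Scatter plot of the observations.
plot(TotalPayments ~ NoofClaims, data = toy,
     pch  = 19,
     xlab = "Number of claims",
     ylab = "Total payments (thousand SEK)",
     main = "Data with fitted regression line")

# abline() accepts a fitted lm object and draws its intercept and slope.
abline(fit, col = "red", lwd = 2)
```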

Predict the values of y using the model:

Predicting y is simple now that we have the linear equation. For the "Number of Claims" values 75 and 150, we'll apply this model and find the "Total Payments".

When

x = 75, y = 19.9945 + (3.4138 * 75) = 276.0295 thousand SEK

x = 150, y = 19.9945 + (3.4138 * 150) = 532.0645 thousand SEK
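The same arithmetic can be done in R in one vectorized step. This sketch simply plugs the rounded intercept and slope from the equation above into the formula; the variable names are for illustration only.

```r
# Rounded coefficients taken from the equation in the post.
intercept <- 19.9945
slope     <- 3.4138

# Numbers of claims we want predictions for.
claims <- c(75, 150)

# Apply y = intercept + slope * x to both values at once.
payments <- intercept + slope * claims
payments  # total payments in thousand SEK
```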

Alternatively, R can make the prediction for us; here are the steps.
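A typical way to do this is predict() with a new data frame whose column name matches the predictor in the model formula. The sketch below fits on invented toy data (not the real dataset), so its predictions will not match the values quoted in this post.

```r
# Invented toy data with the same column names as the post's dataset.
toy <- data.frame(
  NoofClaims    = c(10, 20, 30, 40, 50),
  TotalPayments = c(50, 90, 120, 160, 190)
)
fit <- lm(TotalPayments ~ NoofClaims, data = toy)

# New x values must sit in a column named exactly like the predictor.
new_claims <- data.frame(NoofClaims = c(75, 150))

# Point predictions from the fitted line.
predicted <- predict(fit, newdata = new_claims)
predicted

# Optionally, ask for prediction intervals to see the uncertainty as well.
predict(fit, newdata = new_claims, interval = "prediction")
```

With the post's own model, the first argument would be lmfit instead of fit.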

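R has predicted the y values as 276.0313 thousand SEK and 532.0680 thousand SEK respectively. The small differences from the hand calculation arise because the equation above uses coefficients rounded to four decimal places.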

Complete R code can be found here.

Conclusion

Let us quickly check whether we achieved our objectives by revisiting the questions we started with.

- Determine a linear regression model equation to represent this data
  - Yes. We applied the linear regression model and got the mathematical equation to represent this data.

- Decide whether the new equation is a "good fit" to represent this data
  - Yes. We judged the equation to be a "good fit" based on the R-squared value.

- Graphically represent the relation
  - Yes. We did this using R.

- Make a few predictions
  - Yes. We successfully predicted Total Payments for a couple of new claim counts.

Closing Comments

How do we know what is allowed for prediction?

Values predicted within the range of the observed data points are generally reliable, whereas values predicted beyond that range are *not*. (So, we need to take the y value for x = 150 with a pinch of salt.)

A regression line tells us the trend among the data that we see; it tells us nothing about the data beyond.
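A simple guard against accidental extrapolation is to compare each new x value with the range of the observed x values before trusting its prediction. The sketch below is one possible check on invented toy data (not the real dataset); the names new_x and within_range are hypothetical.

```r
# Invented toy data with the same column names as the post's dataset.
toy <- data.frame(
  NoofClaims    = c(10, 20, 30, 40, 50),
  TotalPayments = c(50, 90, 120, 160, 190)
)

# Values we would like predictions for.
new_x <- c(30, 150)

# Smallest and largest observed number of claims.
observed_range <- range(toy$NoofClaims)

# TRUE means interpolation (inside the observed range),
# FALSE means extrapolation (outside it).
within_range <- new_x >= observed_range[1] & new_x <= observed_range[2]

data.frame(NoofClaims = new_x, within_observed_range = within_range)
```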

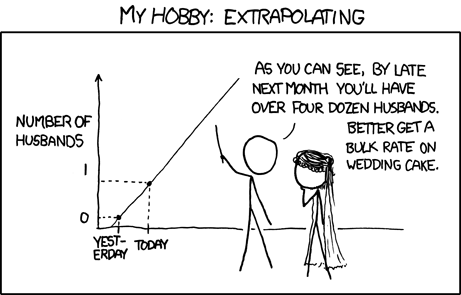

If you extrapolate beyond the observed data points, you may get absurd results.

Source: xkcd.com/605
